Otter.ai Faces Class Action Lawsuit Over Unauthorized Recording and Data Use

A California lawsuit alleges that Otter.ai records meeting participants without consent and uses their conversations to train AI models, raising major privacy concerns for users and non-users alike.

Otter.ai Accused of Recording Without Consent

A complaint filed in a federal district court in California alleges that Otter.ai records users and non-users without obtaining the required consent. The lawsuit claims that their voices and conversations are used to train speech recognition models in violation of California law, the Electronic Communications Privacy Act, and the Computer Fraud and Abuse Act. The case was filed on behalf of plaintiff Justin Brewer and more than 100 potential plaintiffs.

Scope of Allegations

Otter.ai is accused of recording participants in Google Meet, Zoom, and Microsoft Teams meetings through its "Otter Notetaker" service, regardless of whether participants are Otter users. The lawsuit highlights that non-users have no opportunity to grant or deny consent. The complaint argues that Otter shifts legal responsibility to its customers rather than obtaining consent directly, exposing participants to unauthorized data use.

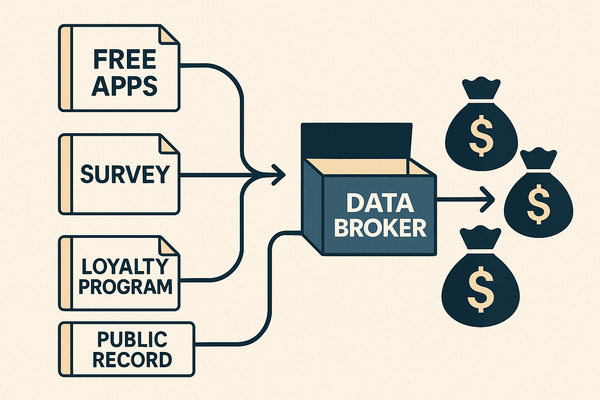

Types of Data Collected

The complaint emphasizes that Otter.ai uses voice recordings, transcripts, and potentially confidential meeting content to train its AI systems. Although Otter claims to use de-identified data, the lawsuit argues that its de-identification methods are unreliable and that sensitive information is retained indefinitely. The data may include privileged communications, confidential business discussions, and personal identifiers shared in meetings.

Who is Affected

All participants in meetings recorded by Otter.ai, including individuals who are not Otter users, may be affected. This includes employees, business partners, clients, and other stakeholders in enterprises where Otter.ai is deployed. The lawsuit raises concerns that the company uses such data for its own financial benefit without adequate safeguards or transparency.

What Affected Individuals Can Do

Individuals concerned about exposure through transcription tools should:

- Request explicit disclosure and consent for any meeting recordings.

- Exercise the right to stop or leave calls where unauthorized transcription is occurring.

- Review organizational policies for the use of transcription technology in sensitive contexts such as legal, HR, or executive meetings.

- Push for adoption of clear data governance, transparency, and employee training on ethical usage of recorded information.

- Seek legal recourse if privacy rights are violated under state or federal law.

Industry-Wide Implications

Experts warn that transcription applications introduce risks not present in traditional recording systems, since vendors retain access to recordings for training AI models. Similar lawsuits have been filed against the makers of voice assistants, highlighting the growing ethical and legal challenges of AI-driven transcription. Enterprises are advised to balance convenience with accountability by prioritizing transparency, proper consent, and privacy safeguards.

Protecting Privacy Online

This lawsuit underscores the growing risks associated with AI transcription services. Individuals and organizations can take proactive steps to safeguard sensitive information and personal privacy, starting with choosing tools designed for transparency and user control. Sign up for Reklaim Protect to improve data privacy online and reduce the risks associated with unauthorized data collection.